This blog is about open source, operating systems (mainly Linux), networking and electronics. The information here is presented in the form of howtos. Sometimes the information might be in Portuguese!

Monday, June 1, 2009

Adding Disk - Storage To VMWare ESX

Adding storage is one of the big differences between VMWare ESX and the VMWare desktop version.

In the next few lines I'm going to show how to prepare a disk and add it to VMWare ESX.

Step #1 Run fdisk -l and find the disk that you want to format with VMFS3.

$ su

# fdisk -l | grep Disk

Disk /dev/sda: 32.2 GB, 32212254720 bytes

Disk /dev/sdb: 53.6 GB, 53687091200 bytes

Disk /dev/sdc: 32.2 GB, 32212254720 bytes

Disk /dev/sdd: 53.6 GB, 53687091200 bytes

Disk /dev/sde: 32.2 GB, 32212254720 bytes

Disk /dev/sdf: 10.7 GB, 10737418240 bytes

Disk /dev/sdg: 32.2 GB, 32212254720 bytes

Disk /dev/sdh: 32.2 GB, 32212254720 bytes

Disk /dev/sdi: 32.2 GB, 32212254720 bytes

Disk /dev/sdj: 21.4 GB, 21474836480 bytes

Disk /dev/sdk: 214.7 GB, 214748364800 bytes

Disk /dev/cciss/c0d0: 73.3 GB, 73372631040 bytes

Note: /dev/sdk is the disk I will be adding to VMWare ESX.

# fdisk -l /dev/sdk

Disk /dev/sdk: 214.7 GB, 214748364800 bytes

255 heads, 63 sectors/track, 26108 cylinders

Units = cylinders of 16065 * 512 = 8225280 bytes

Device Boot Start End Blocks Id System

As you can see, sdk doesn't have any partitions, so we must create one and later format it as "vmfs3".
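When there are many disks, it can help to reduce the listing to just device names and sizes. The following is a minimal sketch, not part of the original procedure: the function name list_disk_sizes is made up for illustration, and it assumes the old fdisk output format shown above, where each "Disk" line ends in "<number> bytes".

```shell
#!/bin/sh
# Hypothetical helper: read "Disk ..." lines on stdin and print
# "<device> <size-in-bytes>" for each of them.
list_disk_sizes() {
    awk '$1 == "Disk" && $NF == "bytes" {
        dev = $2
        sub(/:$/, "", dev)        # strip the trailing colon from "/dev/sdk:"
        print dev, $(NF - 1)      # the byte count is the second-to-last field
    }'
}

# Sample input copied from the listing above. On a real host you would
# run: fdisk -l | list_disk_sizes
printf '%s\n' \
    'Disk /dev/sdj: 21.4 GB, 21474836480 bytes' \
    'Disk /dev/sdk: 214.7 GB, 214748364800 bytes' \
    'Disk /dev/cciss/c0d0: 73.3 GB, 73372631040 bytes' |
    list_disk_sizes
```

Lines that do not match the expected shape are skipped, so newer fdisk versions with a different "Disk" line layout would need the awk condition adjusted.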

Step #2 Creating a Partition (to format later on in "vmfs3")

Note: the partition in this example occupies the whole disk.

First we will create the partition (n), then change its type (t) to fb, and then save the changes (w). Afterwards, run fdisk /dev/sdk again and list the partitions (p); the new partition should be listed with type fb.

# fdisk /dev/sdk

The number of cylinders for this disk is set to 26108.

There is nothing wrong with that, but this is larger than 1024,

and could in certain setups cause problems with:

1) software that runs at boot time (e.g., old versions of LILO)

2) booting and partitioning software from other OSs

(e.g., DOS FDISK, OS/2 FDISK)

Command (m for help): n

Command action

e extended

p primary partition (1-4)

p

Partition number (1-4): 1

First cylinder (1-26108, default 1):

Using default value 1

Last cylinder or +size or +sizeM or +sizeK (1-26108, default 26108):

Using default value 26108

Command (m for help): t

Selected partition 1

Hex code (type L to list codes): fb

Changed system type of partition 1 to fb (Unknown)

Command (m for help): w

The partition table has been altered!

Calling ioctl() to re-read partition table.

Syncing disks.

Note: I tried to create an extended partition, but it didn't work; fdisk didn't let me change the type to "fb".
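The interactive session above boils down to a short sequence of answers. As a sketch only, the hypothetical helper below (the name fdisk_answers is mine, it is not an fdisk feature) prints that sequence so it can be reviewed. It assumes the same prompts as in the transcript and a disk with no existing partitions, and it does not touch any device by itself.

```shell
#!/bin/sh
# Hypothetical helper: print the answers typed in the fdisk session above,
# one per line. The two empty answers accept the default first and last
# cylinder, so the partition fills the whole disk.
fdisk_answers() {
    printf '%s\n' n p 1 '' '' t fb w
}

# Show the sequence, marking the empty answers so they are visible.
fdisk_answers | sed 's/^$/<Enter>/'
```

Piping the raw output of fdisk_answers into fdisk /dev/sdk should repeat the session without prompts, but that rewrites the partition table, so double check the device name before trying it.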

Step #3 Run esxcfg-vmhbadevs -m to see which vmhba device is mapped to the partition created in step #2

# esxcfg-vmhbadevs -m

vmhba1:0:4:1 /dev/sdd1 4866618b-6a9fda41-fba6-00565aa64ffa

vmhba1:0:1:1 /dev/sda1 486660ea-fe9d98aa-8010-00565aa64ffa

vmhba1:0:3:1 /dev/sdc1 48666166-5068fbcd-dfcd-00565aa64ffa

vmhba1:0:2:1 /dev/sdb1 48666146-f15b8e96-2c49-00565aa64ffa

vmhba1:0:6:1 /dev/sdf1 486661b9-4da3f066-3017-00565aa64ffa

vmhba1:0:5:1 /dev/sde1 486661a4-9d3c1b7d-3ec6-00565aa64ffa

vmhba1:0:8:1 /dev/sdh1 486661e5-84ce84f3-acd2-00565aa64ffa

vmhba0:0:0:3 /dev/cciss/c0d0p3 48666bff-9ed64a25-636c-00215aa65f04

vmhba1:0:10:1 /dev/sdj1 4866620f-939412da-7ede-00565aa64ffa

vmhba1:0:7:1 /dev/sdg1 486661d0-debeffc1-9e08-00565aa64ffa

vmhba1:0:9:1 /dev/sdi1 486661f9-4cff8fab-57a6-00565aa64ffa

Because there is no partition starting with "sdk" in this list (for example, vmhba1:0:11:1 /dev/sdk1), let's list only the disks to see whether VMWare ESX detects the disk at all:

# esxcfg-vmhbadevs

vmhba0:0:0 /dev/cciss/c0d0

vmhba1:0:1 /dev/sda

vmhba1:0:2 /dev/sdb

vmhba1:0:3 /dev/sdc

vmhba1:0:4 /dev/sdd

vmhba1:0:5 /dev/sde

vmhba1:0:6 /dev/sdf

vmhba1:0:7 /dev/sdg

vmhba1:0:8 /dev/sdh

vmhba1:0:9 /dev/sdi

vmhba1:0:10 /dev/sdj

vmhba1:0:11 /dev/sdk

The disk is there, but its partition does not show up in the -m listing yet; we will format it in the next step.

Step #4 Formatting the Previously Created Partition in "vmfs3"

Basically we will run vmkfstools -C vmfs3 -S "volume name" vmhba#_from_step#3

In the previous step we saw that VMWare detected sdk with the id "vmhba1:0:11", but listed no partition for it. Comparing the results of "esxcfg-vmhbadevs -m" (list partitions) and "esxcfg-vmhbadevs" (list disks) shows that a partition id is the disk id followed by the partition number, so the id of the partition sdk1 should be "vmhba1:0:11:1". This is the id we will use in the format command below.

# vmkfstools -C vmfs3 -S "ESX03" vmhba1:0:11:1

Creating vmfs3 file system on "vmhba1:0:11:1" with blockSize 1048576 and volume label "ESX03".

Successfully created new volume: 4a23df5c-41c0dac6-a39f-00215aa64ffa
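The rule used above (disk id plus ":" plus partition number) can be sketched as a small lookup. This is only an illustration based on the two listings in step #3: the function name vmhba_partition_id is hypothetical, and it assumes partition names are the disk name followed by a number (sdk1) or by "p" and a number (c0d0p3).

```shell
#!/bin/sh
# Hypothetical helper: given the "esxcfg-vmhbadevs" disk listing on stdin
# and a Linux partition such as /dev/sdk1, print the vmhba partition id.
vmhba_partition_id() {
    part=$1
    awk -v part="$part" 'NF >= 2 {
        disk = $2
        # The partition name must start with the disk name ...
        if (index(part, disk) == 1) {
            rest = substr(part, length(disk) + 1)
            sub(/^p/, "", rest)           # cciss style: c0d0p3 -> 3
            # ... and what is left must be the partition number only.
            if (rest ~ /^[0-9]+$/) print $1 ":" rest
        }
    }'
}

# Sample input copied from the disk listing in step #3. On the ESX host
# you would run: esxcfg-vmhbadevs | vmhba_partition_id /dev/sdk1
printf '%s\n' \
    'vmhba0:0:0 /dev/cciss/c0d0' \
    'vmhba1:0:10 /dev/sdj' \
    'vmhba1:0:11 /dev/sdk' |
    vmhba_partition_id /dev/sdk1
```

For /dev/sdk1 this prints vmhba1:0:11:1, the same id passed to vmkfstools above.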

Now you have the disk ready to add to VMWare ESX.

Step #5 Add the storage to a Blade (physical PC) in VMWare ESX

Just click on one blade, go to "Configuration | Add Storage", select "Disk/LUN", and click Next in the following windows.

Note: I think that if you add the disk to one blade, it will be added to all the other blades.

I tried to add it to a second blade and it didn't allow me to do that.

Step #6 Create Virtual Machines and select the datastore previously created

Listing Partitions and Disks with FDISK

To list all partitions and disks using fdisk, as root just type:

# fdisk -l

And you will get disk and partition information all together:

Disk /dev/sda: 32.2 GB, 32212254720 bytes

255 heads, 63 sectors/track, 3916 cylinders

Units = cylinders of 16065 * 512 = 8225280 bytes

Device Boot Start End Blocks Id System

/dev/sda1 * 1 3916 31455206 fb Unknown

Disk /dev/sdb: 53.6 GB, 53687091200 bytes

255 heads, 63 sectors/track, 6527 cylinders

Units = cylinders of 16065 * 512 = 8225280 bytes

Disk /dev/cciss/c0d0: 73.3 GB, 73372631040 bytes

255 heads, 63 sectors/track, 8920 cylinders

Units = cylinders of 16065 * 512 = 8225280 bytes

Device Boot Start End Blocks Id System

/dev/cciss/c0d0p1 * 1 13 104391 83 Linux

/dev/cciss/c0d0p2 14 650 5116702+ 83 Linux

/dev/cciss/c0d0p3 651 8584 63729855 fb Unknown

/dev/cciss/c0d0p4 8585 8920 2698920 f Win95 Ext'd (LBA)

/dev/cciss/c0d0p5 8585 8653 554211 82 Linux swap

/dev/cciss/c0d0p6 8654 8907 2040223+ 83 Linux

/dev/cciss/c0d0p7 8908 8920 104391 fc Unknown

This is quite confusing if you have multiple disks with multiple partitions, so next I'm going to show you how to list only the disks, and then how to pick a disk and list its partitions.

## List All Disks #####

To list only the disks, as root just type:

# fdisk -l | grep Disk

The result should be something like this:

Disk /dev/sdk doesn't contain a valid partition table

Disk /dev/sda: 32.2 GB, 32212254720 bytes

Disk /dev/sdb: 53.6 GB, 53687091200 bytes

Disk /dev/cciss/c0d0: 73.3 GB, 73372631040 bytes

Here you see only the hard drives and no partitions, so now you can pick the hard drive whose partitions you want to see. I'm picking /dev/cciss/c0d0, and next I will show you only its partitions.

## List a Disk's Partitions #####

To list all the partitions on one disk, as root just type:

# fdisk -l /dev/cciss/c0d0

The result should be something like this:

Disk /dev/cciss/c0d0: 73.3 GB, 73372631040 bytes

255 heads, 63 sectors/track, 8920 cylinders

Units = cylinders of 16065 * 512 = 8225280 bytes

Device Boot Start End Blocks Id System

/dev/cciss/c0d0p1 * 1 13 104391 83 Linux

/dev/cciss/c0d0p2 14 650 5116702+ 83 Linux

/dev/cciss/c0d0p3 651 8584 63729855 fb Unknown

/dev/cciss/c0d0p4 8585 8920 2698920 f Win95 Ext'd (LBA)

/dev/cciss/c0d0p5 8585 8653 554211 82 Linux swap

/dev/cciss/c0d0p6 8654 8907 2040223+ 83 Linux

/dev/cciss/c0d0p7 8908 8920 104391 fc Unknown
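The same filtering idea can be pushed one step further. As a sketch, the hypothetical function below (list_fb_partitions is my own name) reads fdisk -l style output and prints only the partitions whose Id column is fb. It assumes the column layout shown above, where an optional "*" boot flag shifts the Id column by one.

```shell
#!/bin/sh
# Hypothetical helper: print the partitions whose Id column is "fb".
list_fb_partitions() {
    awk '$1 ~ /^\/dev\// {
        # With a "*" boot flag the Id is field 6, otherwise field 5.
        id = ($2 == "*") ? $6 : $5
        if (id == "fb") print $1
    }'
}

# Sample input copied from the listings above. On a real host you would
# run: fdisk -l | list_fb_partitions
list_fb_partitions <<'EOF'
Device Boot Start End Blocks Id System
/dev/sda1 * 1 3916 31455206 fb Unknown
/dev/cciss/c0d0p2 14 650 5116702+ 83 Linux
/dev/cciss/c0d0p3 651 8584 63729855 fb Unknown
/dev/cciss/c0d0p4 8585 8920 2698920 f Win95 Ext'd (LBA)
/dev/cciss/c0d0p7 8908 8920 104391 fc Unknown
EOF
```

With the sample input this prints /dev/sda1 and /dev/cciss/c0d0p3.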

That's all, happy listing...

Saturday, April 11, 2009

Recovering Data From Disks With Bad Sectors

Hack and / - When Disaster Strikes: Hard Drive Crashes

All is not necessarily lost when your hard drive starts the click of death. Learn how to create a rescue image of a failing drive while it still has some life left in it.

The following is the beginning of a series of columns on Linux disasters and how to recover from them, inspired in part by a Halloween Linux Journal Live episode titled “Horror Stories”. You can watch the original episode at www.linuxjournal.com/video/linux-journal-live-horror-stories.

Nothing teaches you about Linux like a good disaster. Whether it's a hard drive crash, a wayward rm -rf command or fdisk mistakes, there are any number of ways your normal day as a Linux user can turn into a nightmare. Now, with that nightmare comes great opportunity: I've learned more about how Linux works by accidentally breaking it and then having to fix it again, than I ever have learned when everything was running smoothly. Believe me when I say that the following series of articles on system recovery is hard-earned knowledge.

Treated well, computer equipment is pretty reliable. Although I've experienced failures in just about every major computer part over the years, the fact is, I've had more computers outlast their usefulness than not. That being said, there's one computer component you can almost count on to fail at some point—the hard drive. You can blame it on the fast-moving parts, the vibration and heat inside a computer system or even a mistake on a forklift at the factory, but when your hard drive fails prematurely, no five-year warranty is going to make you feel better about all that lost data you forgot to back up.

The most important thing you can do to protect yourself from a hard drive crash (or really most Linux disasters) is back up your data. Back up your data! Not even a good RAID system can protect you from all hard drive failures (plus RAID doesn't protect you if you delete a file accidentally), so if the data is important, be sure to back it up. Testing your backups is just as important as backing up in the first place. You have not truly backed up anything if you haven't tested restoring the backup. The methods I list below for recovering data from a crashed hard drive are much more time consuming than restoring from a backup, so if at all possible, back up your data.

Now that I'm done with my lecture, let's assume that for some reason, one of your hard drives crashed and you did not have a backup. All is not necessarily lost. There are many different kinds of hard drive failure. Now, in a true hard drive crash, the head of the hard drive actually will crash into the platter as it spins at high speed. I've seen platters after a head crash that are translucent in sections as the head scraped off all of the magnetic coating. If this has happened to you, no command I list here will help you. Your only recourse will be one of the forensics firms out there that specialize in hard drive recovery. When most people say their hard drive has crashed, they are talking about a less extreme failure. Often, what has happened is that the hard drive has developed a number of bad blocks—so many that you cannot mount the filesystem—or in other cases, there is some different failure that results in I/O errors when you try to read from the hard drive. In many of these circumstances, you can recover at least some, if not most, of the data. I've been able to recover data from drives that sounded horrible and other people had completely written off, and it took only a few commands and a little patience.

Hard drive recovery works on the assumption that not all of the data on the drive is bad. Generally speaking, if you have bad blocks on a hard drive, they often are clustered together. The rest of the data on the drive could be fine if you could only access it. When hard drives start to die, they often do it in phases, so you want to recover as much data as quickly as possible. If a hard drive has I/O errors, you sometimes can damage the data further if you run filesystem checks or other repairs on the device itself. Instead, what you want to do is create a complete image of the drive, stored on good media, and then work with that image.

A number of imaging tools are available for Linux—from the classic dd program to advanced GUI tools—but the problem with most of them is that they are designed to image healthy drives. The problem with unhealthy drives is that when you attempt to read from a bad block, you will get an I/O error, and most standard imaging tools will fail in some way when they get an error. Although you can tell dd to ignore errors, it happily will skip to the next block and write nothing for the block it can't read, so you can end up with an image that's smaller than your drive. When you image an unhealthy drive, you want a tool designed for the job. For Linux, that tool is ddrescue.

To make things a little confusing, there are two similar tools with almost identical names. dd_rescue (with an underscore) is an older rescue tool that still does the job, but it works in a fairly basic manner. It starts at the beginning of the drive, and when it encounters errors, it retries a number of times and then moves to the next block. Eventually (usually after a few days), it reaches the end of the drive. Often bad blocks are clustered together, and in the case when all of the bad blocks are near the beginning of the drive, you could waste a lot of time trying to read them instead of recovering all of the good blocks.

The ddrescue tool (no underscore) is part of the GNU Project and takes the basic algorithm of dd_rescue further. ddrescue tries to recover all of the good data from the device first and then divides and conquers the remaining bad blocks until it has tried to recover the entire drive. Another added feature of ddrescue is that it optionally can maintain a log file of what it already has recovered, so you can stop the program and then resume later right where you left off. This is useful when you believe ddrescue has recovered the bulk of the good data. You can stop the program and make a copy of the mostly complete image, so you can attempt to repair it, and then start ddrescue again to complete the image.

The first thing you will need when creating an image of your failed drive is another drive of equal or greater size to store the image. If you plan to use the second drive as a replacement, you probably will want to image directly from one device to the next. However, if you just want to mount the image and recover particular files, or want to store the image on an already-formatted partition or want to recover from another computer, you likely will create the image as a file. If you do want to image to a file, your job will be simpler if you image one partition from the drive at a time. That way, it will be easier to mount and fsck the image later.

The ddrescue program is available as a package (ddrescue in Debian and Ubuntu), or you can download and install it from the project page. Note that if you are trying to recover the main disk of a system, you clearly will need to recover either using a second system or find a rescue disk that has ddrescue or can install it live (Knoppix fits the bill, for instance).

Once ddrescue is installed, it is relatively simple to run. The first argument is the device you want to image. The second argument is the device or file to which you want to image. The optional third argument is the path to a log file ddrescue can maintain so that it can resume. For our example, let's say I have a failing hard drive at /dev/sda and have mounted a large partition to store the image at /mnt/recovery/. I would run the following command to rescue the first partition on /dev/sda:

$ sudo ddrescue /dev/sda1 /mnt/recovery/sda1_image.img /mnt/recovery/logfile

Press Ctrl-C to interrupt

Initial status (read from logfile)

rescued: 0 B, errsize: 0 B, errors: 0

Current status

rescued: 349372 kB, errsize: 0 B, current rate: 19398 kB/s

ipos: 349372 kB, errors: 0, average rate: 16162 kB/s

opos: 349372 kB

Note that you need to run ddrescue with root privileges. Also notice that I specified /dev/sda1 as the source device, as I wanted to image to a file. If I were going to output to another hard drive device (like /dev/sdb), I would have specified /dev/sda instead. If there were more than one partition on this drive that I wanted to recover, I would repeat this command for each partition and save each as its own image.
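Repeating the command for each partition can be sketched as a small loop. The helper below is hypothetical (print_rescue_commands is not part of ddrescue) and only prints the commands so they can be reviewed first. It follows the source, image and log file argument order described above, and it assumes you want one image and one log file per partition, named after the partition.

```shell
#!/bin/sh
# Hypothetical helper: print one ddrescue command per partition.
# Usage: print_rescue_commands <destination-dir> <partition>...
# Nothing is executed; the output is meant to be reviewed and then run by hand.
print_rescue_commands() {
    dest=$1
    shift
    for part in "$@"; do
        name=$(basename "$part")      # /dev/sda1 -> sda1
        printf 'ddrescue %s %s/%s_image.img %s/%s.log\n' \
            "$part" "$dest" "$name" "$dest" "$name"
    done
}

# Example with two partitions of the failing drive from the text.
print_rescue_commands /mnt/recovery /dev/sda1 /dev/sda2
```

Keeping a separate log file per partition means each rescue can be stopped and resumed on its own.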

As you can see, a great thing about ddrescue is that it gives you constantly updating output, so you can gauge your progress as you rescue the partition. In fact, in some circumstances, I prefer using ddrescue over dd for regular imaging as well, just for the progress output. Having constant progress output additionally is useful when considering how long it can take to rescue a failing drive. In some circumstances, it even can take a few days, depending on the size of the drive, so it's good to know how far along you are.

Once you have a complete image of your drive or partition, the next step is to repair the filesystem. Presumably, there were bad blocks and areas that ddrescue could not recover, so the goal here is to attempt to repair enough of the filesystem so you at least can mount it. Now, if you had imaged to another hard drive, you would run the fsck against individual partitions on the drive. In my case, I created an image file, so I can run fsck directly against the file:

$ sudo fsck -y /mnt/recovery/sda1_image.img

I'm assuming I will encounter errors on the filesystem, so I added the -y option, which will make fsck go ahead and attempt to repair all of the errors without prompting me.

Once the fsck has completed, I can attempt to mount the filesystem and recover my important files. If you imaged to a complete hard drive and want to try to boot from it, after you fsck each partition, you would try to mount them individually and see whether you can read from them, and then swap the drive into your original computer and try to boot from it. In my example here, I just want to try to recover some important files from this image, so I would mount the image file loopback:

$ sudo mount -o loop /mnt/recovery/sda1_image.img /mnt/image

Now I can browse through /mnt/image and hope that my important files weren't among the corrupted blocks.

Unfortunately in some cases, a hard drive has far too many errors for fsck to correct. In these situations, you might not even be able to mount the filesystem at all. If this happens, you aren't necessarily completely out of luck. Depending on what type of files you want to recover, you may be able to pull the information you need directly from the image. If, for instance, you have a critical term paper or other document you need to retrieve from the machine, simply run the strings command on the image and output to a second file:

$ sudo strings /mnt/recovery/sda1_image.img > /mnt/recovery/sda1_strings.txt

The sda1_strings.txt file will contain all of the text from the image (which might turn out to be a lot of data) from man page entries to config files to output within program binaries. It's a lot of data to sift through, but if you know a keyword in your term paper, you can open up this text file in less, and then press the / key and type your keyword in to see whether it can be found. Alternatively, you can grep through the strings file for your keyword and the surrounding lines. For instance, if you were writing a term paper on dolphins, you could run:

$ sudo grep -C 1000 dolphin /mnt/recovery/sda1_strings.txt > /mnt/recovery/dolphin_paper.txt

This would not only pull out any lines containing the word dolphin, it also would pull out the surrounding 1,000 lines. Then, you can just browse through the dolphin_paper.txt file and remove lines that aren't part of your paper. You might need to tweak the -C argument in grep so that it grabs even more lines.
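To see what the -C argument does before running it against a huge strings file, it can help to try it on a tiny sample. The sketch below is an illustration only: the helper name context_around and the sample text are made up, and it adds -i so that a capitalized "Dolphin" at the start of a sentence is matched too, which the command above would miss.

```shell
#!/bin/sh
# Hypothetical helper: print every line matching a keyword (ignoring case)
# plus the given number of context lines on each side.
# Usage: context_around <keyword> <file> <context-lines>
context_around() {
    keyword=$1 file=$2 lines=$3
    grep -i -C "$lines" -- "$keyword" "$file"
}

# A small stand-in for sda1_strings.txt so the example is safe to run anywhere.
sample=$(mktemp)
printf '%s\n' 'junk 1' 'junk 2' 'Dolphins are marine mammals.' 'junk 4' 'junk 5' > "$sample"

# One line of context on each side of the match.
context_around dolphin "$sample" 1
rm -f "$sample"
```

With one line of context, the sample prints the matching line together with "junk 2" and "junk 4"; raising the last argument widens the window in the same way as raising -C in the command above.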

In conclusion, when your hard drive starts to make funny noises and won't mount, it isn't necessarily the end of the world. Although ddrescue is no replacement for a good, tested backup, it still can save the day when disaster strikes your hard drive. Also note that ddrescue will work on just about any device, so you can use it to attempt recovery on those scratched CD-ROM discs too.

Kyle Rankin is a Senior Systems Administrator in the San Francisco Bay Area and the author of a number of books, including Knoppix Hacks and Ubuntu Hacks for O'Reilly Media. He is currently the president of the North Bay Linux Users' Group.

Taken From: Linux Journal, Issue 179, March 2009 - Hack and / - When Disaster Strikes: Hard Drive Crashes

Friday, April 10, 2009

Making Web Pages In Java with Google Web Toolkit

Web 2.0 Development with the Google Web Toolkit

There's much hype related to Web 2.0, and most people agree that software like Google Maps, Gmail and Flickr fall into that category. Wouldn't you like to develop similar programs allowing users to drag around maps or refresh their e-mail inboxes, all without ever needing to reload the screen?

Until recently, creating such highly interactive programs was, to say the least, difficult. Few development tools, little debugging help and browser incompatibilities all added up to a complex mix. Now, however, if you want to produce such cutting-edge applications, you can use modern software methodologies and tools, work with the high-level Java language, and forget about HTML, JavaScript and whether Firefox and Internet Explorer behave the same way. The Google Web Toolkit (GWT) makes it easy to do a better job and produce more modern Web 2.0 programs for your users.

The question of what Web 2.0 actually is has several answers, including Sir Tim Berners-Lee's (the creator of the World Wide Web) view that it's just a reuse of components that were there already. The term originally was coined by Tim O'Reilly, promoting “the Web as a platform”, with data as a driving force and technologies fostering innovation by assembling systems and sites that get information and features from distributed, different, independent developers and services.

This notion goes along with the idea of letting users run applications entirely through a browser, without installing anything on their machines. These new programs usually feature rich, user-friendly interfaces, akin to the ones you would get from an installed program, and they generally are achieved with AJAX (see the What Is AJAX? sidebar) to reduce download times and speed up display time.

Web 2.0 applications use the same infrastructure that developers are largely already familiar with: dynamic HTML, CSS and JavaScript. In addition, they often use XML or JSON for representing and communicating data between the server and browser. This data communication is often done using Web service requests via the DOM API XMLHttpRequest.

What Is AJAX?

The standard model for Web applications is something like this: you get a screenful of text and fields from a server, you fill in some fields, and when you click a button, the browser sends the data you typed to a server (wait), which processes it (wait), and sends back an answer (wait), which your browser displays, and then the cycle restarts. This is by far the most common way Web applications operate, and you must get used to the delays. Nothing happens immediately, because every answer that needs data from a server requires a round trip.

AJAX (Asynchronous JavaScript And XML) is a technique that lets a Web application communicate in the background (asynchronously) with a Web server to exchange (send or receive) data with it. This does away with the requirement to reload the whole page after every action or user click. Thus, using AJAX increases the level of interaction, does away with waiting for pages to reload and allows for enhanced functionality. A well-programmed application will send requests in the background, as you are doing other things, so you won't have to stare at a blank screen or a turning-hourglass cursor. This is the Asynchronous part of the AJAX acronym.

The next part of the AJAX acronym is JavaScript. JavaScript allows a Web page to contain a program, and this program is what allows the Web page to connect to a server as previously described. However, it's not just a question of having JavaScript, but also of how it is implemented in the browser. Firefox and Internet Explorer both provide AJAX access, but with some differences, so programmers must take those differences into account when doing the connection. Data is usually retrieved using XMLHttpRequest, but other techniques are possible, such as using iframes.

Finally, the last part of the AJAX acronym is XML. XML is a standard markup language, used for sharing and passing information. As we've seen, the DOM API for making Web service requests is named XMLHttpRequest, and most likely, the original intent was that XML be used as the protocol for exchanging data between browser and server. However, neither the X in AJAX nor the XML in XMLHttpRequest means that you have to use XML; any data protocol at all, including no protocol, can be used.

JSON (JavaScript Object Notation) often is used; it's more lightweight than XML, and as you might guess by its name, is often a better fit for JavaScript. See Figure 3 for some actual JSON code; remember, it's not meant to be clear to humans, but compact and easy to understand for machines.

AJAX comprises basic technologies that have been around for a while now, and the AJAX term itself was created in 2005 by Jesse Garrett. GWT uses AJAX to allow the client program to communicate with the server or execute procedures on it in a fully transparent way. Of course, you also can use AJAX explicitly for any special purposes you might have.

The Google Web Toolkit (GWT—rhymes with “nitwit”) is a tool for Web programmers. Its first public appearance was in May 2006 at the JavaOne conference. Currently (at the time of this writing), version 1.5.3 has just been released. It is licensed mainly under the Apache 2.0 Open Source License, but some of its components are under different licenses. Don't confuse JavaScript with Java; despite the name, the languages are unrelated, and the similarities come from some common roots.

In short, GWT makes it easier to write high-performing, interactive, AJAX applications. Instead of using the JavaScript language (which is powerful, but lacking in areas like modularity and testing features, making the development of large-scale systems more difficult), you code using the Java language, which GWT compiles into optimized, tight JavaScript code. Moreover, plenty of software tools exist to help you write Java code, which you now will be able to use for testing, refactoring, documenting and reusing—all these things have become a reality for Web applications.

You also can forget about HTML and DHTML (Dynamic HTML, which implies changing the actual source code of the page you are seeing on the fly) and some additional subtle compatibility issues therein. You code using Java widgets (such as text fields, check boxes and more), and GWT takes care of converting them into basic HTML fields and controls. Don't worry about localization matters either; with GWT, it's easy to produce locale-specific versions of code.

There's another welcome bonus too. GWT takes care of the differences between browsers, so you don't have to spend time writing the same code in different ways to please the particular quirks of each browser. Typically, if you just code away and don't pay attention to those small details, your site will end up looking fine in, say, Mozilla Firefox, but won't work at all in Internet Explorer or Safari. This is a well-known classic Web development problem, and it's wise to plan for compatibility tests before releasing any site. GWT lets you forget about those problems and focus on the task instead.

According to its developers, GWT produces high-quality code that matches (and probably surpasses) the quality (size and speed) of handwritten JavaScript. The GWT Web page contains the motto “Faster AJAX than you can write by hand!”

GWT also endeavors to minimize the resulting code size to speed up transfers and shorten waiting time. By default, the end code is mostly unreadable (being geared toward the browser, not a snooping user), but if you have any problems, you can ask for more legible code so you can understand the relationship between your Java code and the produced JavaScript.

Before installing GWT, you should have a few things already installed on your machine:

Java Development Kit (JDK), so you can compile and test Java applications; several more tools also are included.

Java Runtime Environment (JRE), including the Java Virtual Machine (JVM) and all the class libraries required for production and development environments.

A development environment—Google's own developers use Eclipse, so you might want to follow suit. Or, you can install GWT4NB and do some tweaking and fudging and work with NetBeans, another popular development environment.

GWT itself weighs in at about 27MB; after downloading it, extract it anywhere you like with tar jxf ../gwt-linux-1.5.3.tar.bz2. No further installation steps are required. You can use GWT from any directory.

For this article, I used Eclipse. For more serious work, you probably also will require some other additions, such as the Data Tools Platform (DTP), Eclipse Java Development Tools (JDT), Eclipse Modeling Framework (EMF) and Graphical Editing Framework (GEF), but you easily can add those (and more) with Eclipse's own software update tool (you can find it on Eclipse's main menu, under Help—and no, I don't know why it is located there).

Before starting a project, you should understand the four components of GWT:

When you are developing an application, GWT runs in hosted mode and provides a Web browser (and an embedded Tomcat Web server), which allows you to test your Java application the same way your end users would see it. Note that you will be able to use the interactive debugging facilities of your development suite, so you can forget about placing alert() commands in JavaScript code.

To help you build an interface, there is a Web interface library, which lets you create and use Web browser widgets, such as labels, text boxes, radio buttons and so on. You will do your Java programming using those widgets, and the compilation process will transform them into HTML-equivalent ones.

Because what runs in the client's browser is JavaScript, there needs to be a Java emulation library, which provides JavaScript-equivalent implementations of the most common Java standard classes. Note that not all of Java is available, and there are restrictions as to which classes you can use. It's possible that you will have to roll your own code if you want to use an unavailable class. As of version 1.5, GWT covers much of the JRE. In addition, as of version 1.5, GWT supports using Java 5.

Finally, in order to deploy your application, there is a Java-to-JavaScript compiler (translator), which you will use to produce the final Web code. You will need to place the resulting code, the JavaScript, HTML and CSS on your Web server later, of course.

If you are like most programmers, you probably will be wondering about your converted application's performance. However, GWT generates ultra-compact code that can be compressed and cached further, so end users will download a few dozen kilobytes of end code, only once. Furthermore, with version 1.5, the quality of the generated code is approaching (and even surpassing) the quality of handwritten JavaScript, especially for larger projects. Finally, because you won't need to waste time doing debugging for every existing Web browser, you will have more time for application development itself, which lets you produce more features and better applications.

Packages

Using GWT requires learning about several packages. The most important ones are:

com.google.gwt.http.client: provides the client-side classes for making HTTP requests and processing the received responses. You will use it if you need to do some AJAX on your own, beyond the calls done by GWT itself.

com.google.gwt.i18n.client: provides internationalization support. You will need it if you are developing a system that will be available in several languages.

com.google.gwt.json.client and com.google.gwt.xml.client: used for parsing and reading JSON and XML data, respectively.

com.google.gwt.junit.client: used for building automated JUnit tests.

com.google.gwt.user.client.ui: provides panels, buttons, text boxes and all the other user-interface elements and classes. You certainly will use these.

com.google.gwt.user.client.rpc and com.google.gwt.user.server.rpc: these have to do with remote procedure calls (RPCs). GWT allows you to call server code transparently, as if the client were residing in the same machine as the server.

You can find information on these and other packages on-line, at google-web-toolkit.googlecode.com/svn/javadoc/1.5/index.html.

Now, let's turn to a practical example. Creating a new project is done with the command line rather than from inside Eclipse. Create a directory for your project, and cd to it. Then create a project in it, with:

/path/to/GWT/projectCreator -eclipse ProjectName

Next, create a basic empty application, with:

/path/to/GWT/applicationCreator -eclipse ProjectName \

com.CompanyName.client.ApplicationName

Then, open Eclipse, go to File→Import→General, choose Existing Projects into Workspace, and select the directory in which you created your project. Do not check the Copy Projects into Workspace box so that the project will be left at the directory you created.

After doing this, you will be able to edit both the HTML and Java code, add new classes and test your program in hosted mode, as described earlier. When you are satisfied with the final product, you can compile it (an appropriate script was generated when you created the original project) and deploy it to your Web server.

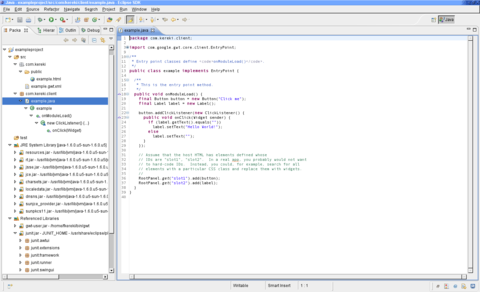

Let's do an example mashup. We're going to have a text field, the user will type something there, and we will query a server (okay, with only one server, it's not much of a mashup, but the concept can be extended easily) and show the returned data. Of course, for a real-world application, we wouldn't display the raw data, but rather do further processing on it. The example project itself will be called exampleproject, and its entry point will be example, see Listing 1 and Figure 1.

Listing 1. Projects must be created by hand, outside Eclipse, and imported into it later.

# cd

# mkdir examplefiles

# cd examplefiles

# ~/bin/gwt/projectCreator -eclipse exampleproject

Created directory ~/examplefiles/src

Created directory ~/examplefiles/test

Created file ~/examplefiles/.project

Created file ~/examplefiles/.classpath

# ~/bin/gwt/applicationCreator -eclipse exampleproject \

com.kereki.client.example

Created directory ~/examplefiles/src/com/kereki

Created directory ~/examplefiles/src/com/kereki/client

Created directory ~/examplefiles/src/com/kereki/public

Created file ~/examplefiles/src/com/kereki/example.gwt.xml

Created file ~/examplefiles/src/com/kereki/public/example.html

Created file ~/examplefiles/src/com/kereki/client/example.java

Created file ~/examplefiles/example.launch

Created file ~/examplefiles/example-shell

Created file ~/examplefiles/example-compile

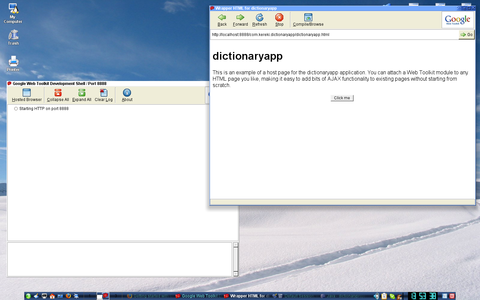

According to the Getting Started instructions on the Google Web Toolkit site, you should click the Run button to start running your project in hosted mode, but I find it more practical to run it in debugging mode. Go to Run→Debug, and launch your application. Two windows will appear: the development shell and the wrapper HTML window, a special version of the Mozilla browser. If you do any code changes, you won't have to close them and relaunch the application. Simply click Refresh, and you will be running the newer version of your code.

Now, let's get to our changes. Because we're using JSON and HTTP, we need to add a pair of lines:

<inherits name="com.google.gwt.json.JSON"/>

<inherits name="com.google.gwt.http.HTTP"/>

to the example.gwt.xml file. We'll rewrite the main code and add a couple of classes to do calls to servers that provide JSON output (see The Same Origin Policy sidebar). For this, add two classes to the client: JSONRequest and JSONRequestHandler; their code is shown in Listings 2 and 3.

The Same Origin Policy

The Same Origin Policy (SOP) is a security restriction that basically prevents a page loaded from a certain origin from accessing a page from a different origin. By origin, we mean the trio: protocol + host + port. In http://www.mysite.com:80/some/path/to/a/page, the protocol is http, the host is www.mysite.com, and the port is 80. The SOP would allow access to any document coming from http://www.mysite.com:80, but disallow going to https://www.mysite.com:80/something (different protocol), http://dev.mysite.com:80/something (different host) or http://www.mysite.com:81/something (different port).

Why is this a good idea? Without it, it would be possible for JavaScript from a certain origin to access data from another origin and manipulate it secretly. This would be the ultimate phishing. You could be looking at a legitimate, valid, true page, but it might be monitored by a third party. With SOP in place, you know for certain that whatever you are viewing was sent by the true origin. There can't be any code from other origins.

Of course, for GWT, this is a bit of a bother, because it means that a client application cannot simply connect to any other server or Web service to get data from it. There are (at least) two ways around this: a special, simpler way that allows getting JSON data only or a more complex solution that implies coding a server-side proxy. Your client calls the proxy, and the proxy calls the service. Both solutions are explained in the Google Web Toolkit Applications book (see Resources). In this article, we use the JSON method, and you can find the source code at www.gwtsite.com/code/webservices.

The simple JSON method requires a special callback routine, and this could be a showstopper. However, many sites implement this, including Amazon, Digg, Flickr, GeoNames, Google, Yahoo! and YouTube, and the method is catching on, so it's quite likely you will be able to find an appropriate service.
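To make the origin comparison concrete, here is a minimal shell sketch of the protocol + host + port rule described above. The function names origin_of and same_origin are hypothetical, and the parsing is deliberately simple: it assumes plain http or https URLs and does not handle user info or IPv6 literals. A browser's real check is more involved.

```shell
#!/bin/bash
# origin_of (hypothetical helper): print "protocol://host:port" for a URL.
origin_of() {
    local url="$1"
    local proto="${url%%://*}"       # text before "://"
    local rest="${url#*://}"         # text after "://"
    local hostport="${rest%%/*}"     # drop the path, if any
    local host="${hostport%%:*}"     # text before the first ":"
    local port="${hostport##*:}"     # text after the last ":"
    # No explicit port given: fall back to the protocol's default port.
    if [ "$host" = "$hostport" ]; then
        case "$proto" in
            https) port=443 ;;
            *)     port=80 ;;
        esac
    fi
    echo "$proto://$host:$port"
}

# same_origin (hypothetical helper): succeed only if both URLs share
# the same protocol, host and port.
same_origin() {
    [ "$(origin_of "$1")" = "$(origin_of "$2")" ]
}
```

With these definitions, same_origin "http://www.mysite.com:80/a" "http://www.mysite.com:81/b" fails, because only the port differs, which is exactly the third case the sidebar lists.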

Listing 2. Source Code for the JSONRequest Class

package com.kereki.client;

public class JSONRequest {

public static void get(String url,

JSONRequestHandler handler) {

String callbackName = "JSONCallback"+handler.hashCode();

get(url+callbackName, callbackName, handler);

}

public static void get(String url, String callbackName,

JSONRequestHandler handler) {

createCallbackFunction(handler, callbackName);

addScript(url);

}

public static native void addScript(String url) /*-{

var scr = document.createElement("script");

scr.setAttribute("language", "JavaScript");

scr.setAttribute("src", url);

document.getElementsByTagName("body")[0].appendChild(scr);

}-*/;

private native static void createCallbackFunction(

JSONRequestHandler obj,

String callbackName) /*-{

tmpcallback = function(j) {

obj.@com.kereki.client.JSONRequestHandler::

onRequestComplete(

Lcom/google/gwt/core/client/JavaScriptObject;)(j);

};

eval( "window." + callbackName + "=tmpcallback" );

}-*/;

}

Note that the last two methods are written in JavaScript instead of Java; the JavaScript code is written inside Java comments. The special @id... syntax inside the JavaScript is used for accessing Java methods and fields from JavaScript. This syntax is translated to the correct JavaScript by GWT when the application is compiled. See the GWT documentation for more information.

Listing 3. Source Code for the JSONRequestHandler Class

package com.kereki.client;

import com.google.gwt.core.client.JavaScriptObject;

public interface JSONRequestHandler {

public void onRequestComplete(JavaScriptObject json);

}

You can find the code for this listing and the previous one at www.gwtsite.com/code/webservices.

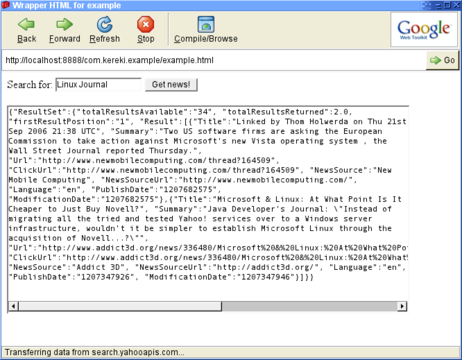

Let's opt to create the screen completely with GWT code. The button will send a request to a server (in this case, Yahoo! News) that provides an API with JSON results. When the answer comes in, we will display the received code in a text area. The complete code is shown in Listing 4, and Figure 3 shows the running program.

Listing 4. Source Code for the Main Program

package com.kereki.client;

import com.google.gwt.core.client.EntryPoint;

import com.google.gwt.core.client.JavaScriptObject;

import com.google.gwt.user.client.ui.*;

import com.google.gwt.json.client.*;

import com.google.gwt.http.client.URL;

import com.kereki.client.JSONRequest;

import com.kereki.client.JSONRequestHandler;

public class example implements EntryPoint {

public void onModuleLoad() {

final TextBox tbSearchFor = new TextBox();

final TextArea taJsonResult = new TextArea();

taJsonResult.setCharacterWidth(80);

taJsonResult.setVisibleLines(20);

final HorizontalPanel hp1 = new HorizontalPanel();

Button bGetNews = new Button("Get news!",

new ClickListener() {

public void onClick(Widget sender) {

JSONRequest.get(

"http://search.yahooapis.com/"+

"NewsSearchService/V1/newsSearch?"+

"appid=YahooDemo&query="+

URL.encode(tbSearchFor.getText())+

"&results=2&language=en"+

"&output=json&callback=",

new JSONRequestHandler() {

public void onRequestComplete(

JavaScriptObject json) {

JSONObject jj= new JSONObject(json);

taJsonResult.setText(jj.toString());

};

}

);

}

});

hp1.add(new Label("Search for:"));

hp1.add(new HTML(" ",true));

hp1.add(tbSearchFor);

hp1.add(new HTML(" ",true));

hp1.add(bGetNews);

RootPanel.get().add(hp1);

RootPanel.get().add(new HTML("<br/>",true));

RootPanel.get().add(taJsonResult);

}

}

The code in Listing 4 shows access to a single service, but it would be easy to connect to several sources at once and produce a mashup of news.

After testing the application, it's time to distribute it. Go to the directory where you created the project, run the compile script (in this case, example-compile), and copy the resulting files to your server's Web pages directory. In my case, with OpenSUSE, it's /srv/www/htdocs, but with other distributions, it could be /var/www/html (Listing 5). Users could use your application by navigating to http://127.0.0.1/com.kereki.example/example.html, but of course, you probably will select another path.

Listing 5. Compiling the Code and Deploying the Files to Your Server

# cd ~/examplefiles/

# sh ./example-compile

Output will be written into ./www/com.kereki.example

Copying all files found on public path

Compilation succeeded

# sudo cp -R ./www/com.kereki.example /srv/www/htdocs/

We have written a Web page without ever writing any HTML or JavaScript code. Moreover, we did our coding in a high-level language, Java, using a modern development environment, Eclipse, full of aids and debugging tools. Finally, our program looks quite different from classic Web pages. It does no full-screen refreshes, and the user experience will be more akin to that of a desktop program.

GWT is a very powerful tool, allowing you to apply current software engineering techniques to an area that is lacking good, solid development tools. Being able to apply Java, a high-level modern language, to solve both client and server problems, and being able to forget about browser quirks and incompatibilities, should be enough to make you want to give GWT a spin.

Resources

Google Web Toolkit Applications by Ryan Dewsbury, Prentice-Hall, 2008.

Google Web Toolkit for AJAX by Bruce Perry, PDF edition, O'Reilly, 2006.

Google Web Toolkit Java AJAX Programming by Prabhakar Chaganti, Packt Publishing, 2007.

Google Web Toolkit Solutions: Cool & Useful Stuff by David Geary and Rob Gordon, PDF edition, Prentice Hall, 2007.

Google Web Toolkit Solutions: More Cool & Useful Stuff by David Geary and Rob Gordon, Prentice Hall, 2007.

Google Web Toolkit—Taking the Pain out of AJAX by Ed Burnett, PDF edition, The Pragmatic Bookshelf, 2007.

GWT in Action: Easy AJAX with the Google Web Toolkit by Robert Hanson and Adam Tacy, Manning, 2007.

AJAX: a New Approach to Web Applications: www.adaptivepath.com/ideas/essays/archives/000385.php

AJAX: Getting Started: developer.mozilla.org/en/docs/AJAX:Getting_Started

AJAX Tutorial: www.xul.fr/en-xml-ajax.html

Apache 2.0 Open Source License: code.google.com/webtoolkit/terms.html

Eclipse: www.eclipse.org

Google Web Toolkit: code.google.com/webtoolkit

GWT4NB, a Plugin for GWT Work with NetBeans: https://gwt4nb.dev.java.net

Java SE (Standard Edition): java.sun.com/javase

Java Development Kit (JDK): java.sun.com/javase/downloads/index.jsp

JSON: www.json.org

JSON: the Fat-Free Alternative to XML: www.json.org/xml.html

NetBeans: www.netbeans.org

Same Origin Policy, from Wikipedia: en.wikipedia.org/wiki/Same_origin_policy

Web 2.0, from Wikipedia: en.wikipedia.org/wiki/Web_2

What Is Web 2.0?, by Tim O'Reilly: www.oreillynet.com/pub/a/oreilly/tim/news/2005/09/30/what-is-web-20.html

XML: www.xml.org

Federico Kereki is a Uruguayan Systems Engineer, with more than 20 years' experience teaching at universities, doing development and consulting work, and writing articles and course material. He has been using Linux for many years now, having installed it at several different companies. He is particularly interested in the better security and performance of Linux boxes.

Taken From: Linux Journal, Issue: 179, February 2009 - Web 2.0 Development with the Google Web Toolkit

Download, Store and Install Packages in Ubuntu Automatically

So I have made a couple of scripts in bash, which I had never used before, so these might not be the best scripts in the world, but they get the job done.

The first script (download_and_store) downloads my favorite apps, together with their dependencies, and stores each one in its own folder; the second (install_all) installs every app found in those folders.

download_and_store

--------------------------------------------------------------------------------------------------

#!/bin/bash

## List of packages to download ####

L_PACKAGES_TO_DOWNLOAD="

vlc

mplayer

amarok

wireshark

k3b

"

################################

D_APTGET_CACHE="/var/cache/apt/archives"

echo "Where will you want to store the packages"

read D_DOWNLOADED_PACKAGES

# clean apt-get's cache

apt-get clean

# create the root directory for the downloaded packages ##

mkdir -p "$D_DOWNLOADED_PACKAGES"

for i in $L_PACKAGES_TO_DOWNLOAD ; do ## go through all the packages on the list

## download the package and its dependencies without installing them (they land in apt-get's cache) ##

apt-get install -d "$i"

## create the dir for the downloaded package ##

mkdir -p "$D_DOWNLOADED_PACKAGES/$i"

## move the downloaded packages from apt-get's cache to the created dir ##

mv "$D_APTGET_CACHE"/*.deb "$D_DOWNLOADED_PACKAGES/$i"

# clean apt-get's cache

apt-get clean

done

install_all

----------------------------------------------------------------------

#!/bin/bash

for i in */ ; do ## go through every dir

echo ">>>>>>>>>>>>>>>>>>>>>"

cd "$i" || continue ## enter a dir (where the package and its dependencies are)

echo "Current dir: $(pwd)"

dpkg -i *.deb ## install all debs (package and its dependencies)

cd ..

echo "<<<<<<<<<<<<<<<<<<<<<"

done

Now for the demonstration, let's use download_and_store to download all of your favorite apps.

# create a file for download_and_store #####

$ sudo gedit download_and_store

Paste the script above, and change the list L_PACKAGES_TO_DOWNLOAD to include your favorite packages (these are separated by a space or newline).
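As a small illustration of why either a space or a newline works as a separator, the sketch below splits a list the same way the script's for loop does. The helper name list_packages is hypothetical and is not part of the scripts above; it relies on bash's default word splitting.

```shell
#!/bin/bash
# list_packages (hypothetical helper): print one package name per line
# from a whitespace-separated list.
list_packages() {
    local pkg
    # $1 is left unquoted on purpose, so bash splits it on spaces,
    # tabs and newlines (the default IFS), just like the for loop
    # in download_and_store.
    for pkg in $1; do
        echo "$pkg"
    done
}

# Sample list mixing both separators.
L_PACKAGES_TO_DOWNLOAD="
vlc
mplayer amarok
wireshark
"
```

Running list_packages "$L_PACKAGES_TO_DOWNLOAD" prints vlc, mplayer, amarok and wireshark on four separate lines, so mixing the two separators in the list is harmless.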

# give the script permissions to execute #####

$ sudo chmod 755 /path_to_it/download_and_store

# execute download_and_store

$ cd /path_to_it

$ sudo ./download_and_store

Where will you want to store the packages

/home/my_user/Desktop/saved_apps --> you chose this dir

Now just wait...

Once it's over, you will have in /home/my_user/Desktop/saved_apps a folder for each application; for example, for vlc you will have a dir named vlc with the vlc package and all of its dependencies.
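To check that every application folder really received its packages, a quick count per folder helps. The following is only a sketch; the function name count_debs is hypothetical, and it assumes the one-folder-per-package layout described above.

```shell
#!/bin/bash
# count_debs (hypothetical helper): for each sub-directory of the given
# root, print "<dir name>: <number of .deb files directly inside it>".
count_debs() {
    local root="$1" dir n
    for dir in "$root"/*/; do
        [ -d "$dir" ] || continue            # the glob matched nothing
        n=$(find "$dir" -maxdepth 1 -type f -name '*.deb' | wc -l)
        echo "$(basename "$dir"): $((n))"    # $((n)) trims any padding from wc
    done
}
```

For example, count_debs /home/my_user/Desktop/saved_apps prints one line per application folder; a folder reporting 0 means nothing was downloaded for that package, which can happen if it was already installed.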

============================

Now, let's use install_all to install all of your downloaded apps.

# create a file for install_all #####

$ sudo gedit /home/my_user/Desktop/saved_apps/install_all

As you can see, install_all must be in the root dir you entered earlier (/home/my_user/Desktop/saved_apps); this script will install everything it can find in the dirs below it.

# give the script permissions to execute #####

$ sudo chmod 755 /home/my_user/Desktop/saved_apps/install_all

# execute install_all

$ cd /home/my_user/Desktop/saved_apps/

$ sudo ./install_all

Now wait...

There, your apps should all be installed.

These scripts are very basic; they are my first in bash programming and aren't fully tested, but if you can get an idea from them, or even improve them, I'm happy.

Thursday, April 9, 2009

Installing ZenOSS on Ubuntu 8.10 (Intrepid Ibex)

# Install Apache With Its Documentation #####

$ sudo apt-get install apache2 apache2-doc

# Start Apache (it should already be started) #####

$ sudo /etc/init.d/apache2 start

# Test Apache #####

Type on Mozilla Firefox: http://127.0.0.1/

It should read: It works!

# Installing MySQL Server and Client #####

$ sudo apt-get install mysql-server mysql-client

Type in MySQL's root password in the upcoming textbox.

# Installing SNMP Query tools #####

$ sudo apt-get install snmp

# Downloading ZenOSS #####

At http://www.zenoss.com/download/links?creg=no

you can see all the supported distributions;

just pick yours if it's there,

otherwise pick the closest one.

I'm running Ubuntu 8.10, which isn't there, so I went for

the Ubuntu 8.04 build. Here's the link:

http://sourceforge.net/project/downloading.php?groupname=zenoss&filename=zenoss-stack-2.3.3-linux.bin&use_mirror=freefr

# Installing ZenOSS #####

$ cd /path_to_zenoss_executable_dir/

$ sudo chmod 777 zenoss-stack-2.3.3-linux.bin

$ sudo ./zenoss-stack-2.3.3-linux.bin

Enter the data the installer GUI asks for, such as the

database root login.

# Logging in to ZenOSS #####

After installing, it should open your browser on the ZenOSS

login page; if not, just type in your browser:

http://localhost:8080/

The default login and password are:

Login: admin

Password: zenoss

Now you can continue with "Installing Net-SNMP on Linux Clients" in the previous post.

Setting Up a SNMP Server in Ubuntu

Simple Network Management Protocol (SNMP) is a widely used protocol for monitoring the health and welfare of network equipment (eg. routers), computer equipment and even devices like UPSs. Net-SNMP is a suite of applications used to implement SNMP v1, SNMP v2c and SNMP v3 using both IPv4 and IPv6.

Net-SNMP Tutorials

http://www.net-snmp.org/tutorial/tutorial-5/

Net-SNMP Documentation

http://www.net-snmp.org/docs/readmefiles.html

# Installing SNMP Server in Ubuntu #####

$ sudo apt-get install snmpd

# Configuring SNMP Server #####

/etc/snmp/snmpd.conf - configuration file for the Net-SNMP SNMP agent.

/etc/snmp/snmptrapd.conf - configuration file for the Net-SNMP trap daemon.

Set up the SNMP server to allow read access from the other machines in your network. To do this, open the file /etc/snmp/snmpd.conf, change the following configuration and save the file.

$ sudo gedit /etc/snmp/snmpd.conf

snmpd.conf

#---------------------------------------------------------------

######################################

# Map the security name/networks into a community name.

# We will use the security names to create access groups

######################################

# sec.name source community

com2sec my_sn1 localhost my_comnt

com2sec my_sn2 192.168.10.0/24 my_comnt

####################################

# Associate the security name (network/community) to the

# access groups, while indicating the snmp protocol version

####################################

# sec.model sec.name

group MyROGroup v1 my_sn1

group MyROGroup v2c my_sn1

group MyROGroup v1 my_sn2

group MyROGroup v2c my_sn2

group MyRWGroup v1 my_sn1

group MyRWGroup v2c my_sn1

group MyRWGroup v1 my_sn2

group MyRWGroup v2c my_sn2

#######################################

# Create the views on to which the access group will have access,

# we can define these views either by inclusion or exclusion.

# inclusion - you access only that branch of the mib tree

# exclusion - you access all the branches except that one

#######################################

# incl/excl subtree mask (optional)

view my_vw1 included .1 80

view my_vw2 included .iso.org.dod.internet.mgmt.mib-2.system

#######################################

# Finally, associate the access groups to the views and give them

# read/write access to the views.

#######################################

# context sec.model sec.level match read write notif

access MyROGroup "" any noauth exact my_vw1 none none

access MyRWGroup "" any noauth exact my_vw2 my_vw2 none

# -----------------------------------------------------------------------------
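Before restarting the daemon, it can be handy to double-check which sources map to which community. The sketch below simply lists the com2sec lines of a configuration file; the function name list_com2sec is hypothetical, and it assumes one directive per line, as in the file above.

```shell
#!/bin/bash
# list_com2sec (hypothetical helper): print "sec.name source community"
# for every com2sec directive in the given snmpd.conf-style file.
# Comment lines are skipped because their first field is "#".
list_com2sec() {
    awk '$1 == "com2sec" { printf "%s %s %s\n", $2, $3, $4 }' "$1"
}
```

Called as list_com2sec /etc/snmp/snmpd.conf with the configuration above, it should report my_sn1 for localhost and my_sn2 for 192.168.10.0/24, both with the my_comnt community.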

# Give access to other interfaces besides the loopback #####

$ sudo gedit /etc/default/snmpd

find the line:

SNMPDOPTS='-Lsd -Lf /dev/null -u snmp -I -smux -p /var/run/snmpd.pid 127.0.0.1'

and change it to:

SNMPDOPTS='-Lsd -Lf /dev/null -u snmp -I -smux -p /var/run/snmpd.pid'
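If you prefer not to edit the file by hand, the same change can be previewed with sed. This is only a sketch: the function name strip_loopback is hypothetical, it assumes the SNMPDOPTS line ends with 127.0.0.1' exactly as shown above, and it prints the result instead of modifying the file.

```shell
#!/bin/bash
# strip_loopback (hypothetical helper): print the given file with the
# trailing " 127.0.0.1" removed from the SNMPDOPTS line, so snmpd is no
# longer restricted to the loopback address. The file is not modified.
strip_loopback() {
    sed "/^SNMPDOPTS=/ s/ 127\.0\.0\.1'\$/'/" "$1"
}
```

Running strip_loopback /etc/default/snmpd shows what the edited file would look like; once the output looks right, you can make the same change in the real file.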

# Restart snmpd to load the new config #####

$ sudo /etc/init.d/snmpd restart

# Test the SNMP Server #####

$ sudo apt-get install snmp

$ sudo snmpwalk -v 2c -c my_comnt localhost system